LoRaWAN Soil Sensor GenAI Project (Part 2)

Let's continue to the second part of this project. With the sensor data now successfully being picked up on the LoRaWAN Gateway, the goal is to write a script that will transmit the uplink data to the Nano Orin.

In the diagram above, lestat-lives is the name of my device, the physical LoRaWAN Soil Sensor shown below, which lives in my basil plant. Notice the pins that dig into the soil, and the absence of any cord or cables, since it is battery powered. The device is low power with a life of 8 years, emits data approximately every 15 minutes, and can transmit over distances up to 8 km.

Data Connectors

There are two software components. The first is the Dragino Gateway. It lives on my local network and is reached over the Gateway's WiFi which, as you can see, is at 10.130.1.1. The Gateway coordinates all the network signals to make sure the soil sensor is being detected (the green check mark next to LoRaWAN).

The second component is the open source "The Things Stack Sandbox", or TTN (it used to be called The Things Network, hence the 'N'). In the screenshot you'll see the live data that has been coming in from the soil sensor ever since I hooked it up three days ago; it has been faithfully tracking data from the basil plant. Below, you can see the data points for the "uplink" data.

Clicking on any uplink data in the console shows the actual data we want:

    "uplink_message": {...
      "decoded_payload": {
        "Bat": 3.594,
        "Hardware_flag": 0,
        ...
        "TempC_DS18B20": "327.60",
        "conduct_SOIL": 5,
        "temp_SOIL": "16.73",
        "water_SOIL": "4.96"
      }
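To make the payload concrete, here is a minimal sketch of pulling the readings out of one uplink in Python. It assumes the uplink has already been parsed into a dict and that the outer key is `uplink_message`, as in the TTN JSON. The helper name `extract_readings` and the `NUMERIC_FIELDS` tuple are my own for illustration and are not from the repo.

```python
import json

# Field names taken from the decoded_payload shown in the console.
NUMERIC_FIELDS = ("Bat", "TempC_DS18B20", "conduct_SOIL", "temp_SOIL", "water_SOIL")


def extract_readings(uplink: dict) -> dict:
    """Return the numeric sensor fields of one uplink as floats (hypothetical helper)."""
    payload = uplink.get("uplink_message", {}).get("decoded_payload", {})
    readings = {}
    for name in NUMERIC_FIELDS:
        if name in payload:
            # Several values arrive as strings ("16.73"), so coerce them to float.
            readings[name] = float(payload[name])
    return readings


# Sample built from the values shown in the console excerpt.
sample = json.loads("""
{"uplink_message": {"decoded_payload": {
    "Bat": 3.594, "Hardware_flag": 0, "TempC_DS18B20": "327.60",
    "conduct_SOIL": 5, "temp_SOIL": "16.73", "water_SOIL": "4.96"}}}
""")
print(extract_readings(sample))
```

Converting to floats up front keeps later comparisons or plotting from tripping over the mix of strings and numbers in the raw payload.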

Knowing that it's the uplink data we need, the question becomes, how do we send it to the Orin? Luckily, there are a few endpoints in TTN that make it easier than I thought.

Batch API Pull (Historical Data)

In the end, this is what I chose, mainly for ease but also for practical reasons. This LoRaWAN sensor, by design, does not emit data in a constant, streaming fashion; it only sends uplink data every 15 minutes or so. That is hardly a reason to run a message broker that passes the data to the Orin in real time, which would be the MQTT use case. If I wanted streaming data to be parsed and used in real time, then we would need MQTT.

So a batch of historical data, refreshed from the last 12 hours, suffices. A curl request with the API key I generated from inside TTN does the trick. However, what about keeping a running file of all the data, even if it goes outside the 12-hour window? Let's take a look at the Python code in detail.
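For readers who prefer Python over curl, here is a rough sketch of the same kind of pull. It is not the code from my repo. It assumes the Storage Integration is enabled on the application, that the cluster name (for example `nam1`) is passed in by the caller, and that the response is newline-delimited JSON with each uplink wrapped in a `result` key. The function names are hypothetical.

```python
import json
import urllib.request


def build_storage_url(cluster: str, app_id: str, device_id: str, last: str = "12h") -> str:
    """Build the Storage Integration URL for one device's stored uplinks."""
    return (
        f"https://{cluster}.cloud.thethings.network/api/v3/as/applications/"
        f"{app_id}/devices/{device_id}/packages/storage/uplink_message?last={last}"
    )


def parse_stream(body: str) -> list:
    """Parse a newline-delimited JSON body into a list of uplink records."""
    records = []
    for line in body.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank separator lines
        obj = json.loads(line)
        # Assumption: each line wraps the uplink in a "result" key.
        records.append(obj.get("result", obj))
    return records


def fetch_last_hours(cluster, app_id, device_id, api_key, last="12h"):
    """Perform the HTTP GET (needs network access and a valid API key)."""
    request = urllib.request.Request(
        build_storage_url(cluster, app_id, device_id, last),
        headers={"Authorization": f"Bearer {api_key}", "Accept": "text/event-stream"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return parse_stream(response.read().decode("utf-8"))
```

A call would look something like `fetch_last_hours("nam1", "soil-sensor-saranac", "lestat-lives", "YOUR_API_KEY")`, with the cluster and key swapped for your own.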

The first step is to load any existing data from the records file (another .json file) that was previously appended to. Second, grab the historical data via curl, which picks up sensor data from the past 12 hours. Third, compare the data just curled to what's in the existing records, using the timestamp as the deconflicter to make sure there are no duplicates. Fourth, save the updated data by appending it to the records. I then run a 15-minute cron job that reruns the script to pick up the latest record off the top.

    def run_collection(self):
        """Main collection process"""
        print("Jetson Orin Soil Sensor Data Collector")
        print("=" * 50)

        # Step 1: Load existing data
        print(f"Step 1: Loaded {len(self.data)} existing records")

        # Step 2: Fetch historical data from API
        print("\nStep 2: Fetching historical data from API...")
        api_records = self.fetch_historical_data()
        if not api_records:
            print("No new data available")
            return

        # Step 3: Compare and reconcile
        print("\nStep 3: Comparing and reconciling data...")
        new_count = self.compare_and_reconcile(api_records)

        # Step 4: Save updated data
        if new_count > 0:
            print(f"\nStep 4: Saving {new_count} new records...")
            self.save_data()
        else:
            print("\nStep 4: No new records to save")

        print("\nFinal status:")
        print(f" Total records: {len(self.data)}")
        print(f" New records added: {new_count}")

All the code for the above is here: https://github.com/nudro/Lora-GenAI/blob/main/Documents/Lorawan/edge/orin_soil_collector.py
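The load, reconcile, and save steps can be sketched roughly as follows. This is a simplified stand-in, not the repo's implementation. It assumes each record carries its timestamp under a `received_at` key and that the records file is a plain JSON list. The function names and the sample timestamps are made up for illustration.

```python
import json
from pathlib import Path


def load_records(path: Path) -> list:
    """Load previously saved records, or start empty if the file is missing."""
    if not path.exists():
        return []
    return json.loads(path.read_text())


def reconcile(existing: list, incoming: list, key: str = "received_at") -> int:
    """Append incoming records whose timestamp is not stored yet; return the count added."""
    seen = {record.get(key) for record in existing}
    added = 0
    for record in incoming:
        stamp = record.get(key)
        if stamp is None or stamp in seen:
            continue  # skip duplicates and records without a timestamp
        existing.append(record)
        seen.add(stamp)
        added += 1
    return added


def save_records(path: Path, records: list) -> None:
    """Write the full record list back to disk as JSON."""
    path.write_text(json.dumps(records, indent=2))


# Illustrative records only; the timestamps are invented for this example.
existing = [{"received_at": "2025-01-01T00:00:00Z", "water_SOIL": "4.96"}]
incoming = [
    {"received_at": "2025-01-01T00:00:00Z", "water_SOIL": "4.96"},  # duplicate
    {"received_at": "2025-01-01T00:15:00Z", "water_SOIL": "4.90"},  # new
]
print(reconcile(existing, incoming))  # prints 1
```

Keeping the seen timestamps in a set means each 15-minute run only does one pass over the stored records, and the 12-hour overlap between pulls never produces duplicate rows.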

MQTT

I'm new to MQTT, so I'll let Gemini do the talking here:

MQTT is a lightweight, publish/subscribe messaging protocol ideal for machine-to-machine communication, especially in the Internet of Things (IoT). It is designed to work over TCP/IP, allowing devices with limited power and bandwidth to send and receive data reliably. This is achieved through a decentralized model where clients publish messages to topics and other clients subscribe to those topics to receive messages from a central broker.

In my diagram below, the idea is that soil-sensor-saranac@ttn serves as the broker, with paho-mqtt as the Python library on the local Ubuntu machine handling the MQTT connection (paho-mqtt is a client library, so it connects to a broker rather than running one). The Orin subscribes to the TTN MQTT server through the IP address of the local machine and listens for any new data coming in.

You'll notice that most of the code is the same, except that it adds a client = mqtt.Client() call. Upon connecting, the client subscribes to the server via client.subscribe("v3/soil-sensor-saranac/devices/lestat-lives/up"); upon receiving a message, it parses it for the uplink data; and upon disconnecting, it closes the connection. I didn't take this script very far, for the reasons I stated earlier, so there are some problems with the logic. Mainly, once the historical data is pulled, reconciled, deconflicted, and appended to the stored data, new readings don't arrive fast enough to require an MQTT listener.

    import paho.mqtt.client as mqtt

    def main():
        print("Jetson Orin Hybrid Soil Sensor Data Collector")
        print("=" * 60)

        collector = OrinHybridCollector()

        # Step 1: Fetch historical data on startup
        print("Step 1: Fetching historical data on startup...")
        historical_count = collector.fetch_historical_data()
        if historical_count > 0:
            collector.save_data()
            print(f"Historical data collection complete: {historical_count} new records")
        else:
            print("No new historical data found")
        print(f"\nCurrent total records: {len(collector.data)}")

        # Step 2: Start MQTT real-time collection
        print("\nStep 2: Starting MQTT real-time collection...")
        client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2)
        client.on_connect = on_connect
        client.on_message = on_message
        client.on_disconnect = on_disconnect
        # Make the collector available to the callbacks as `userdata`
        client.user_data_set(collector)

        # MQTT settings
        mqtt_host = "YOUR_LOCAL_COMPUTER_IP"  # Replace with your local computer's IP address
        mqtt_port = 1883
        mqtt_username = "soil-sensor-saranac@ttn"
        mqtt_password = "YOUR_MQTT_PASSWORD"
        print(f"Connecting to MQTT broker at {mqtt_host}:{mqtt_port}")

The code for this script is here: https://github.com/nudro/Lora-GenAI/blob/main/Documents/Lorawan/edge/orin_hybrid.py
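The excerpt above wires up on_connect, on_message, and on_disconnect without showing them. Here is a minimal sketch of what such callbacks might look like, not the versions in the repo. It assumes the paho-mqtt 2.x callback signatures that go with CallbackAPIVersion.VERSION2, a collector object with a `data` list passed in through user_data_set(), and a `received_at` field at the top level of each uplink message.

```python
import json

TOPIC = "v3/soil-sensor-saranac/devices/lestat-lives/up"  # topic used in the post


def on_connect(client, userdata, flags, reason_code, properties):
    """Subscribe once the broker accepts the connection (VERSION2 signature)."""
    print(f"Connected with reason code: {reason_code}")
    client.subscribe(TOPIC)


def on_message(client, userdata, msg):
    """Parse one uplink and store it on the collector passed via user_data_set()."""
    try:
        uplink = json.loads(msg.payload.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        print("Skipping a message that is not valid JSON")
        return
    record = {
        "received_at": uplink.get("received_at"),
        "decoded_payload": uplink.get("uplink_message", {}).get("decoded_payload", {}),
    }
    # Timestamp-based duplicate check, mirroring the batch script's deconflicting step.
    if all(r.get("received_at") != record["received_at"] for r in userdata.data):
        userdata.data.append(record)


def on_disconnect(client, userdata, flags, reason_code, properties):
    """Log the disconnect; with VERSION2 this callback also receives flags and properties."""
    print(f"Disconnected with reason code: {reason_code}")
```

Because the callbacks only touch `client.subscribe`, `msg.payload`, and `userdata.data`, they can be exercised with small stand-in objects before pointing them at a real broker.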

Alright, that's it so far! Here's a TikTok video showing the whole thing a bit more dynamically and proving that it's indeed receiving the data.

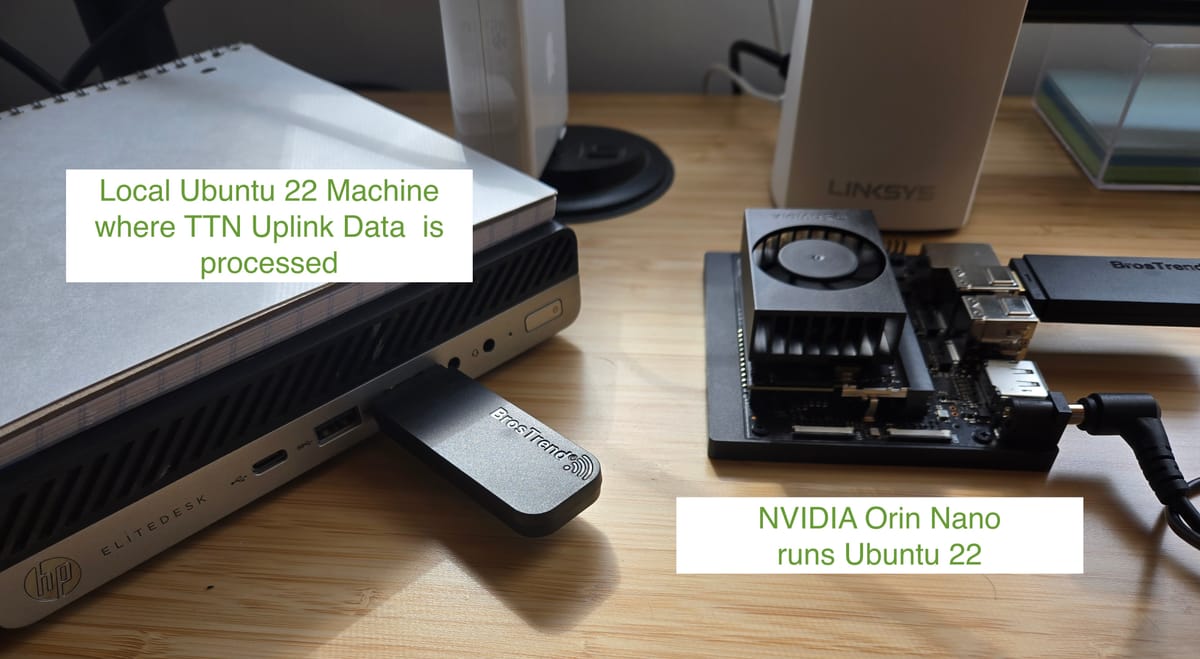

@mybuddyskynet The key building blocks are: 1) Sensor (device) 2) Gateway, which receives and parses signals 3) API key that fetches historical data, or 4) paho-mqtt to act as a local server that your edge device can call via IP 5) some Python scripts for parsing and storing the data as JSON. This holds regardless of the IoT device. For me, both the local and edge machines are running Ubuntu 22. https://github.com/nudro/Lora-GenAI/tree/main/Documents/Lorawan #coding #deeplearning #AI #machinelearning
