I'm human, I promise!

Yes, it's everywhere

As a writer, I never use AI to "author" any text. This includes outlines, inspirational ideas, and certainly content. The reason why is because only three years ago every person on Earth had to suck it up and learn how to write. I always enjoyed writing but had to work on it. I still do.

But after November 2022 (when ChatGPT was introduced), the writing world changed. Now we find self-published "books" on Amazon so flooded by AI that a three book a day, limit has been imposed! The ramification for unknown writers (not the Stephen King's of this world), is the downward pressure to decrease their prices or be wiped out completely, due to books that are rapidly written with AI. Many readers can't tell, and buy these book with the misbelief that they are authored by humans.

Now for those of you who think this is a writer's problem - you might be thinking, 'I don't write books, Catherine!' - well, it's a dilemma that affects anyone who makes digital content. And content doesn't just mean aesthetics. According to HackerRank, One-third of code is now generated by AI yet 74% of developers say it's still hard to find a job. And many developers and engineers are getting pressured from supervisors to 'use AI more and work faster', with the goals being unrealistic for production grade deployment.

So if we're somewhat in agreement that there's a time and place for AI, then what about the instances when we chose not to use it? Do we leave a fingerprint of our unique human identity that is strikingly and obviously human that it could never be confused with AI?

I would argue to say, no. Not at all.

A Black Box

We live in a Black Box world, made all that more opaque by our widespread use and consumption of AI.

For those of you who are unfamiliar with the concept of a Black Box, the idea stemmed from the field of cybernetics, primarily Norbert Wiener (the Father of Cybernetics) and Ross Ashby in 1956 (“An Introduction to Cybernetics”). Cybernetics is the “science of control and communication, in the animal and the machine… a framework on which all individual machines may be ordered, related, and understood (Ashby).” And a Black Box is a system that can only be understood by studying its inputs and outputs, where the internal transformations from one state to another is not accessible (Ashby).

To understand the purist concept of a Black Box, it doesn't even require comprehending AI or even machines. An example:

The child who tries to open a door has to manipulate the handle (the input) so as to produce the desired movement at the latch (the output); and he has to learn how to control the one by the other without being able to see the internal mechanism that links them.

Or even more mechanistically (and mysteriously), a UFO:

...something, say, that has just fallen from a Flying Saucer. We assume, though, that the experimenter has certain given resources for acting on it (e.g. prodding it, shining a light on it) and certain given resources for observing its behaviour (e.g. photographing it, recording its temperature). By thus acting on the Box, and by allowing the Box to affect him and his recording apparatus, the experimenter is coupling himself to the Box, so that the two together form a system with feedback.

As consumers of AI, we've grown accustomed to using the Black Box in our everyday lives without questioning its complex inner workings. From Google Maps to Netflix to Amazon, it's here to stay. But aside from its utility, we never really stop to consider how AI acts any different than us, the humans. We as people behave in obviously unique, humanistic ways that can be readily distinguished from an AI. After all, it's just a computer program. Right?

Not "Just a Computer Program"

Well, thinking about AI that way reduces the complex algorithms into a set of conditional statements, like if-then loops. Behind the scenes, AI models are highly complex mathematical formulas codified into functions and classes that are programmable. Unlike a basic computer program where a human must hard-code the ‘if’ and the ‘then’, AI models learn it without explicit instructions. They do this by being presented large volumes of data and inferring high-dimensional relationships from it, to predict patterns that we, as human beings, cannot identify with our five senses. With the advent of Generative AI, this concept is magnified.

In a supervised AI problem, the model is given examples like pictures and a “label”, meaning the ground truth: picture of a tank, label = tank. The AI model doesn’t have to guess about the tank, because humans have curated a collection, or database, of tank data for it to learn from. But Generative AI doesn’t need these ground truth labels. Generative AI algorithms that belie Stable Diffusion, Midjourney, ChatGPT, and Sora, learn in an unsupervised manner - exploiting the patterns it gains from huge quantities of data, to automatically make relationships on it through the diversity and volume of samples shown. In the pursuit of training these Generative AI models, larger is better. Meaning, the algorithms themselves (often times composed of multiple models strung together), have a dizzying number of parameters that loosely represents its underlying capacity to synthesize new information.

Prove it

Given how the AI model is not “just a computer program”, but rather a system of large learning machines, their propensity to synthesize very realistic human-looking content is getting easier. In the famous Turing Test, also called the Imitation Game, as proposed by Alan Turing (1950), he asked, “Can a machine engage in conversation indistinguishable from a human?” A three-party test, on the other hand, is more rigorous than ‘can a machine fool a human’, and introduces a judge whose goal is to figure out which is which - the machine or the human?

Contemporary AI benchmarks are mostly narrowly-scoped and static, leading to concerns that high performance on these tests reflects memorization or shortcut learning, rather than genuine reasoning abilities. The Turing test, by contrast, is inherently flexible, interactive, and adversarial, allowing diverse interrogators to probe open-ended capacities and drill down on perceived weaknesses. At its core, the Turing test is a measure of substitutability: whether a system can stand-in for a real person without an interlocutor noticing the difference. (Jones and Bergen, “Large Language Models Pass the Turing Test”, 2025)

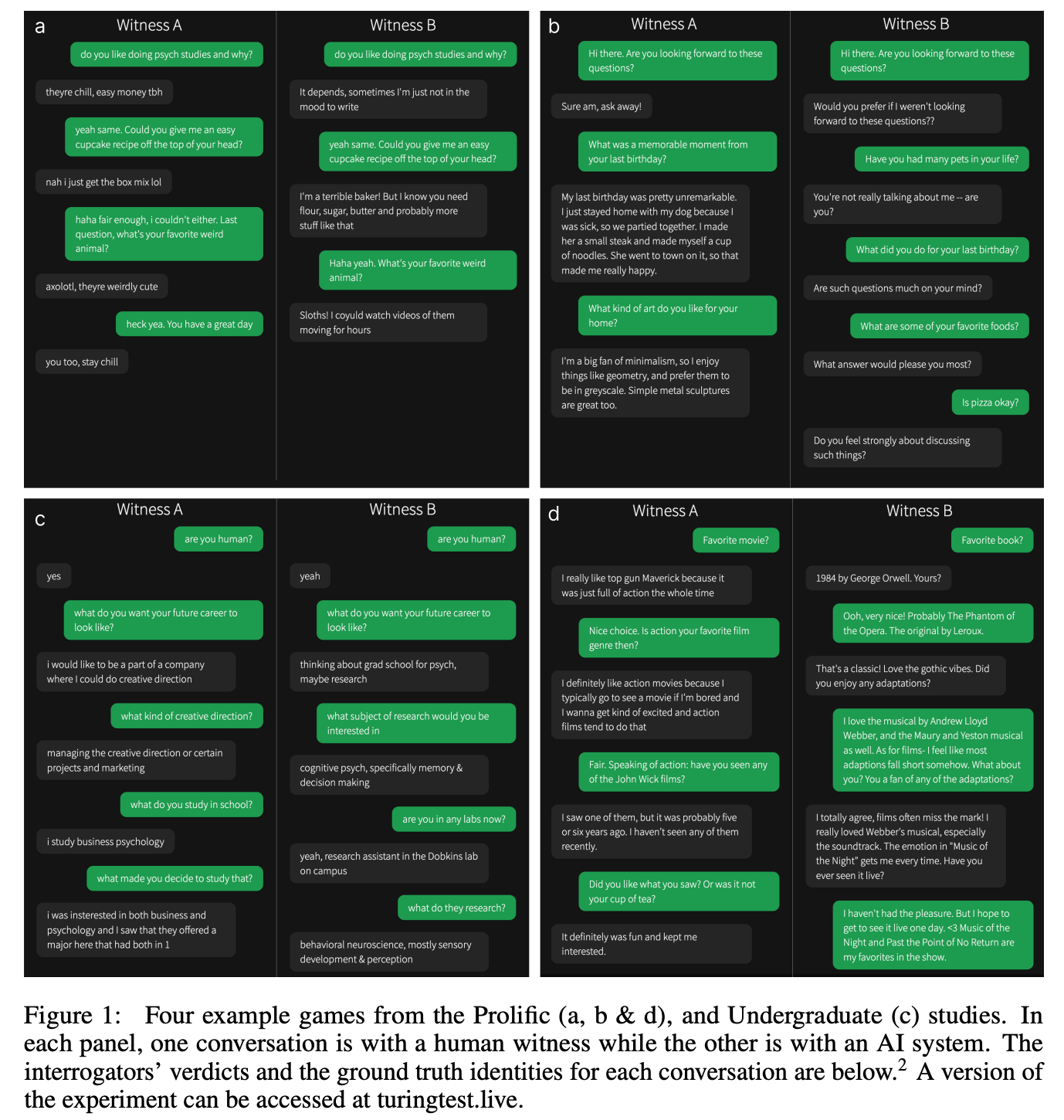

A recent paper by Jones and Bergen, study participants judged GPT-4.5 to be human 73% of the time when participants were offered 5-minute conversations with the AI using the more rigorous three-party test. The setup involved a game that mimicked a text-messaging chat interface as a head-to-head contest against human and AI. The experiment involved 284 participants across 1023 games with a median length of 8 message across 4.2 minutes. When compared to ELIZA, GPT-4o, LLaMa-3.1-405B, and GPT-4.5, GPT-4.5 imbued with a PERSONA won at a statistically significant win rate above chance. This may be the first empirical evidence that any AI passes the three-party Turing test (Jones & Bergen, 2025).

Detecting AI is easier than detecting, well, you.

We’ve gotten to a point where AI models are so ubiquitous that now we have other AI models that fact-check us to determine what is fake versus real. Check out my post a year ago about Deep Fake Detection.

These are detection models, used by companies all over the world to identify if AI generated text, images, videos, and audio. They act as a sentinel, activated to figure out if a video posted on Instagram is a deep fake or if the student submitting an essay used ChatGPT. Many AI detection models use the same kind of methods that are found in the backbones of well-known Generative AI algorithms such as transformers, convolutional neural networks, autoencoders, attention mechanisms, and systems like Generative Adversarial Networks.

The reason this is important is because we are still relying on AIs to fight AIs, and the truth is that these models are still vulnerable to imprecision, inaccuracy, and spoofing. No AI model on Earth is 100% accurate 100% of the time.

There is no single “ring to rule them all”. Numerous detection models are trained specifically for AI generated imagery, somspecific to a specific kind of generator like Stable Diffusion. The same goes for AI generated text and video. While some detection models are multimodal - meaning, they can detect for different data types simultaneously like video and audio - there is still no universal kind of algorithm that can consistently detect all AI generated content. Now, studying and engineering an AI detection model is not easy. It takes years of research and almost endless rounds of trial and error, to design the most optimal algorithm that will be performant. But as new generators emerge, new detection models will have to be engineered to keep up with clever models that output increasingly realistic media.

The falsely accused

What is the implication, then, if AI detection is being mathematically optimized? That sounds pretty rigorous. Well, even the most advanced detection models are not 100% accurate, and this has consequences when it comes to proving your “personhood” - that you, human, are indeed such.

Let’s take an example. DetectGPT (2023) is a state-of-the-art model used to detect AI generated text. Of the many metrics that DetectGPT is used to measure performance include precision, recall, and F1 scores. These are basic and rudimentary metrics commonplace to all models that classify - or, classifiers. DetectGPT’s precision is 0.80. This means that out of everything the model flagged as positive, meaning text that was AI generated, 80% was correctly detected. However, 20% was incorrectly classified. This means that the model decided that 20% of the text it classified, was made by an AI, when it really wasn’t. These are called false positives. Breaking it down a bit more, we can reason that for every 10 pieces of text that the model flagged as being AI-generated, 2 were actually written by a human. That’s a false accusation rate of 1 in 5.

Let’s take another example. Highly accurate AI image detection models like WaDiff and ZoDiac, can achieve precision of 0.98. This is certainly higher than 0.80. Yet, there are still implications. A 98% precision means that for every 100 images the model flagged as being accurately generated by AI, 2 were made by humans. That’s a false accusation rate of 1 in 50.

False accusation rates lead to someone being wrongly accused of using AI when they have not. And it seems there is no existing system - unlike deep fake detection - that can be used to easily prove a human is a human. However, it is straightforward to prove something is AI.

More to come in a follow post...

Let's take for example the most well known "human proving" system out there - the CAPTCHA, which stands for Completely Automated Public Turing test to tell Computers and Humans Apart. In a paper published in July 2023, the authors conduct a survey of over two hundred studies conducted since 2003. CAPTCHA was developed in 2000 by Luis Von Ahn at Carnegie Mellon University, and has since become the Human Interaction Proof to prove you're not a bot through images, audio, and text. CAPTCHAs are generally categorized into text-based (uses Optical Character Recognition) and those that are non-OCR and make you pass an image, audio, question, video or game test. The goal is to keep bots from accessing emails, prevent phishing attacks, ban robots from playing games, and combat worms and spam.

A CAPTCHA is considered good if it is easy for humans to solve and resist potential attacks.

As said earlier that there is a trade-off between robustness and usability. For example, adding more clutter, distortion, or overlapping will result in robust yet less readable and annoying CAPTCHA for human users.