Basic cropping with PIL and PyTorch

I'm embarrassed to say that, even at this point in my life, I still have to look up the syntax for cropping an image. So, once and for all, I'm posting it here so that I don't forget it. And if you were looking for it too, you found it.

PIL

```python
from PIL import Image
from IPython.display import display

pil_im = Image.open('1_003_1_01_NN_V.bmp')
display(pil_im)

# original dims
width, height = pil_im.size
print(width, height)

# resize
newsize = (256, 256)
im1 = pil_im.resize(newsize)
print(im1.size)
display(im1)
```

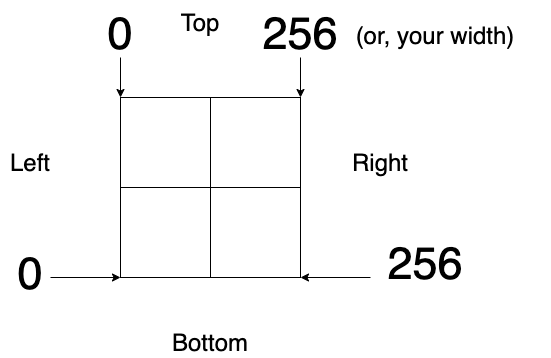

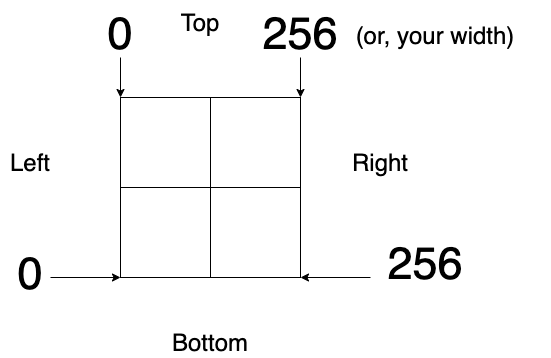

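Let's crop this into even quadrants: from the 256 x 256 image, cut out four equal 128 x 128 sections. PIL's `crop()` takes a box of (left, upper, right, lower) pixel coordinates measured from the top-left corner at (0, 0), and the right and lower edges are exclusive. So the first quadrant runs from pixel 0 up to, but not including, pixel 128, and the last one ends at 256, one past the final pixel index (255). Getting those start and end points right is the key to setting the crop boxes.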

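```python
# Setting the points for the cropped images.
# Boxes are (left, upper, right, lower); right and lower are exclusive.
half = 256 // 2  # 128; integer division keeps the coordinates as ints

# A: top-left quadrant
left_A, top_A, right_A, bottom_A = 0, 0, half, half
# B: top-right quadrant
left_B, top_B, right_B, bottom_B = half, 0, 256, half
# C: bottom-left quadrant
left_C, top_C, right_C, bottom_C = 0, half, half, 256
# D: bottom-right quadrant
left_D, top_D, right_D, bottom_D = half, half, 256, 256
```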

Crop those boxes out of the resized image, im1.

```python
A = im1.crop((left_A, top_A, right_A, bottom_A))  # top-left
B = im1.crop((left_B, top_B, right_B, bottom_B))  # top-right
C = im1.crop((left_C, top_C, right_C, bottom_C))  # bottom-left
D = im1.crop((left_D, top_D, right_D, bottom_D))  # bottom-right

# Show each crop inline (IPython's display() renders images in the notebook)
display(A)
display(B)
display(C)
display(D)
```
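If you need more than four crops, the same box arithmetic generalizes. Below is a minimal sketch of a hypothetical helper, `grid_boxes` (an illustrative name, not part of PIL), that computes (left, upper, right, lower) boxes for any rows x cols grid in row-major order, so for a 2 x 2 grid it reproduces A, B, C and D above. It assumes you only need non-overlapping tiles that cover the whole image.

```python
def grid_boxes(width, height, rows=2, cols=2):
    """Return PIL crop boxes (left, upper, right, lower) tiling an image
    into a rows x cols grid, in row-major order (top-left first).

    right and lower are exclusive, so neighbouring boxes share an edge
    coordinate without sharing any pixels.
    """
    # Integer edge positions; the last edge is exactly width/height,
    # so the tiles cover the full image even if it doesn't divide evenly.
    xs = [c * width // cols for c in range(cols + 1)]
    ys = [r * height // rows for r in range(rows + 1)]
    return [
        (xs[c], ys[r], xs[c + 1], ys[r + 1])
        for r in range(rows)
        for c in range(cols)
    ]


if __name__ == "__main__":
    # Same four quadrants as A, B, C, D for a 256 x 256 image
    print(grid_boxes(256, 256))
    # With PIL (assuming im1 from above):
    #   A, B, C, D = [im1.crop(box) for box in grid_boxes(*im1.size)]
```

Computing each edge as `c * width // cols`, rather than stepping by a fixed tile size, means tiles may differ by a pixel when the image size isn't divisible by the grid, but there are never gaps or overlaps.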

PyTorch Tensors

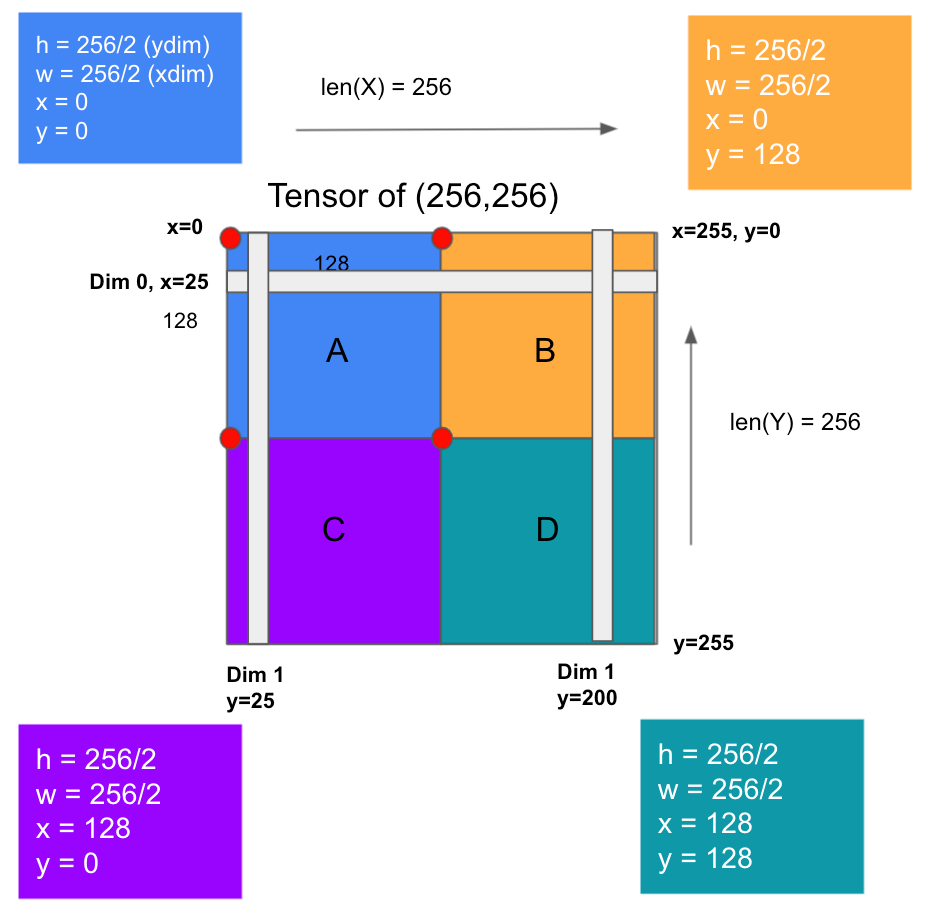

Now say we want to crop the same 4 patches from an image X that has been transformed into a PyTorch tensor and returned by the DataLoader. You can do this with plain tensor slicing. First, the dataset and DataLoader setup:

```python
import glob
import os

from PIL import Image
import torchvision.transforms as transforms
from torch.utils.data import Dataset, DataLoader

# opt holds the argparse options from the rest of the training script,
# where opt.img_width, opt.img_height = 256, 256


class ImageDataset(Dataset):
    def __init__(self, root, transforms_=None, mode="train"):
        self.transform = transforms.Compose(transforms_)
        self.files = sorted(glob.glob(os.path.join(root, mode) + "/*.*"))

    def __getitem__(self, index):
        # convert("RGB") so grayscale files match the 3-channel Normalize below
        img = Image.open(self.files[index % len(self.files)]).convert("RGB")
        img = self.transform(img)
        return {"X": img}

    def __len__(self):
        return len(self.files)


transforms_ = [
    transforms.Resize((opt.img_height, opt.img_width),
                      interpolation=transforms.InterpolationMode.BICUBIC),
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
]

dataloader = DataLoader(
    ImageDataset(root="data/%s" % opt.dataset_name, transforms_=transforms_),
    batch_size=opt.batch_size,
    shuffle=True,
    num_workers=opt.n_cpu,
    drop_last=True,
)
```

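```python
for epoch in range(opt.epoch, opt.n_epochs):
    for i, batch in enumerate(dataloader):
        # X has shape (batch, channels, height, width).
        # Variable is a no-op since PyTorch 0.4, so the tensor is used directly.
        # Tensor (e.g. torch.cuda.FloatTensor) is defined elsewhere in the script.
        X = batch["X"].type(Tensor)

        # do cropping: dim 2 is height (rows), dim 3 is width (columns)
        h2, w2 = opt.img_height // 2, opt.img_width // 2

        # (row, col) = (0, 0): top-left
        A = X[:, :, 0:h2, 0:w2]
        # (row, col) = (0, 128): top-right
        B = X[:, :, 0:h2, w2:2 * w2]
        # (row, col) = (128, 0): bottom-left
        C = X[:, :, h2:2 * h2, 0:w2]
        # (row, col) = (128, 128): bottom-right
        D = X[:, :, h2:2 * h2, w2:2 * w2]
```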
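The hand-written slices above only cover a 2 x 2 grid. Here is a minimal sketch of a hypothetical helper, `grid_crops` (an illustrative name, not a PyTorch function), that applies the same slicing to any rows x cols grid. It assumes the usual (N, C, H, W) batch layout coming out of the DataLoader; a random tensor stands in for a real batch.

```python
import torch


def grid_crops(X, rows=2, cols=2):
    """Split a batch X of shape (N, C, H, W) into rows x cols patches.

    Returns a list in row-major order (top-left first). Dim 2 is height
    and dim 3 is width. Basic slicing returns views, so no data is copied.
    """
    H, W = X.shape[-2], X.shape[-1]
    # Integer edges, as with the PIL boxes: tiles cover the full image
    ys = [r * H // rows for r in range(rows + 1)]
    xs = [c * W // cols for c in range(cols + 1)]
    return [
        X[:, :, ys[r]:ys[r + 1], xs[c]:xs[c + 1]]
        for r in range(rows)
        for c in range(cols)
    ]


if __name__ == "__main__":
    X = torch.randn(4, 3, 256, 256)  # stand-in for a DataLoader batch
    A, B, C, D = grid_crops(X)       # same quadrants as the slices above
    print(A.shape)                   # torch.Size([4, 3, 128, 128])
    print(torch.equal(B, X[:, :, 0:128, 128:256]))  # True
```

Because the patches are views, modifying one in place also modifies X; call `.clone()` on a patch if you need an independent copy.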

Why might you want to use crops? In this case, I've been using crops of different sizes and numbers as patches to pass through a contrastive loss function. Working at the tensor level makes it easier to automate resizing, dividing, and scaling crops across a variety of experiments.
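For varying the number and size of crops, PyTorch's built-in `Tensor.unfold` can extract every non-overlapping patch at once instead of writing out slices. This swaps in a different technique from the slicing above. The helper names `unfold_patches` and `rescale_patches` are hypothetical, and the sketch assumes (N, C, H, W) input. Leftover edge pixels are dropped when H or W isn't a multiple of the patch size. `torch.nn.functional.interpolate` then rescales the patches to a common size.

```python
import torch
import torch.nn.functional as F


def unfold_patches(X, ph, pw):
    """Extract non-overlapping ph x pw patches from X of shape (N, C, H, W).

    Returns shape (N, rows * cols, C, ph, pw) with rows = H // ph and
    cols = W // pw, in row-major order. Edge remainders are dropped.
    """
    N, C = X.shape[:2]
    # unfold(dim, size, step) appends a trailing dim of length `size`:
    # (N, C, H, W) -> (N, C, rows, W, ph) -> (N, C, rows, cols, ph, pw)
    p = X.unfold(2, ph, ph).unfold(3, pw, pw)
    # Move the grid dims ahead of channels, then flatten them into one patch dim
    return p.permute(0, 2, 3, 1, 4, 5).reshape(N, -1, C, ph, pw)


def rescale_patches(patches, size):
    """Resize every patch to size = (h, w) with bilinear interpolation."""
    N, P, C, ph, pw = patches.shape
    flat = patches.reshape(N * P, C, ph, pw)  # interpolate expects 4-D input
    out = F.interpolate(flat, size=size, mode="bilinear", align_corners=False)
    return out.reshape(N, P, C, *size)


if __name__ == "__main__":
    X = torch.randn(4, 3, 256, 256)      # stand-in for a DataLoader batch
    quads = unfold_patches(X, 128, 128)  # 2 x 2 grid -> 4 patches
    print(quads.shape)                   # torch.Size([4, 4, 3, 128, 128])
    small = unfold_patches(X, 64, 64)    # 4 x 4 grid -> 16 patches
    print(rescale_patches(small, (128, 128)).shape)  # torch.Size([4, 16, 3, 128, 128])
```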