Simple PIL Image Cropping

This is a 60-second read, if that.

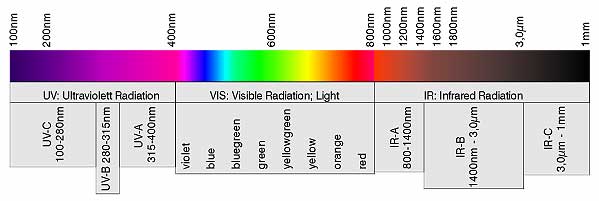

Detecting emotions from thermal imagery is an active area of research in Artificial Intelligence (AI), specifically in the field of Facial Emotion Recognition (FER). Thermal imagery, such as long-wave infrared (LWIR), is an image modality that can provide information unseen in the visible spectrum, such as physiological responses to stimuli that indicate stress or pain.

Researchers have found that thermal imagery is a reliable, non-invasive technique for detecting signs of emotional arousal, and have asserted that thermal imagery could be used as “an atlas of the thermal expression of emotional states”. As a result, thermal imagery has been researched as a promising non-invasive method to detect pain, emotional states, stress, and even deception. Thermal imagery visualizes the radiation emitted from the surface of the skin and can be used as a signal of physiological changes caused by autonomic nervous system arousal in response to various stimuli.

Lately I've been spending a lot of time researching the availability of thermal facial expression databases. There aren't many, and the field is rather limited. Facial recognition databases are a different thing entirely: they cover multiple lighting conditions and head poses, often with occlusion (e.g. glasses), but rarely include expression labels. An expression database, on the other hand, is what FER 2013, AffectNet, CK+, and MultiPIE are; each frame or static image has a discrete/basic affect label.

Some of these visible-spectrum databases also contain spontaneous expressions, meaning the expressions were elicited with cues such as videos or image viewing in order to prompt natural reactions. This is in contrast to posed/fake expressions, which are hotly contested in the affective computing and emotion theory world.

The Iris Database

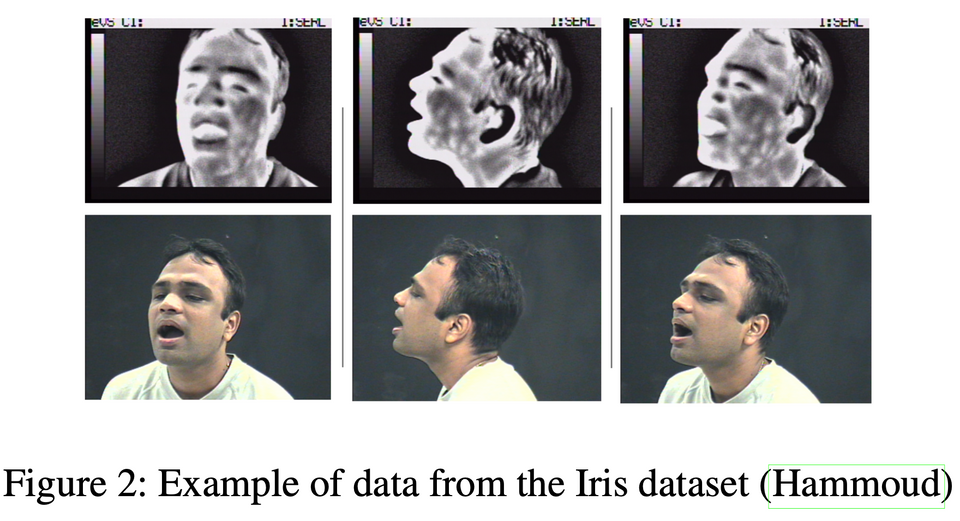

For the beginnings of some research, I've started to explore what these publicly available thermal facial recognition databases have to offer. One example is the Iris dataset (IEEE OTCBVS WS Series Bench; DOE University Research Program in Robotics under grant DOE-DE-FG02-86NE37968; DOD/TACOM/NAC/ARC Program under grant R01-1344-18; FAA/NSSA grant R01-1344-48/49; Office of Naval Research under grant #N000143010022). This is just a short post describing the image processing these images need before they can be fed to computer vision algorithms.

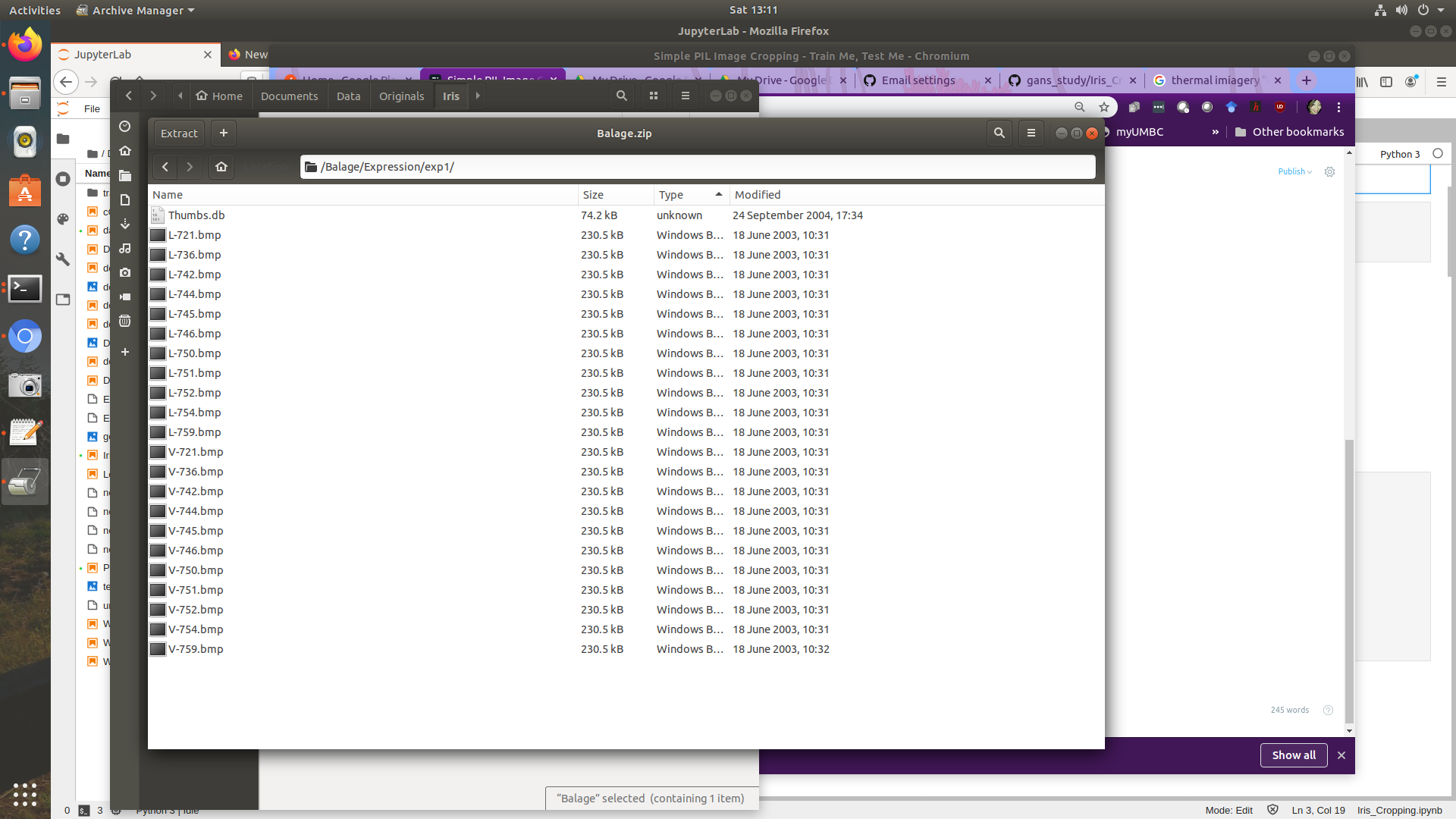

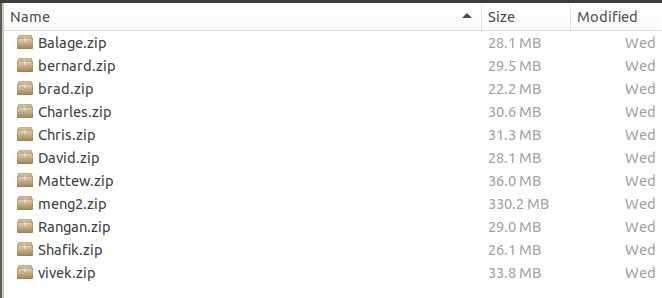

When you download the Iris dataset, there are 11 subjects, all men. Each subject has an Expression and an Illumination directory. Well, most of them do, save one.

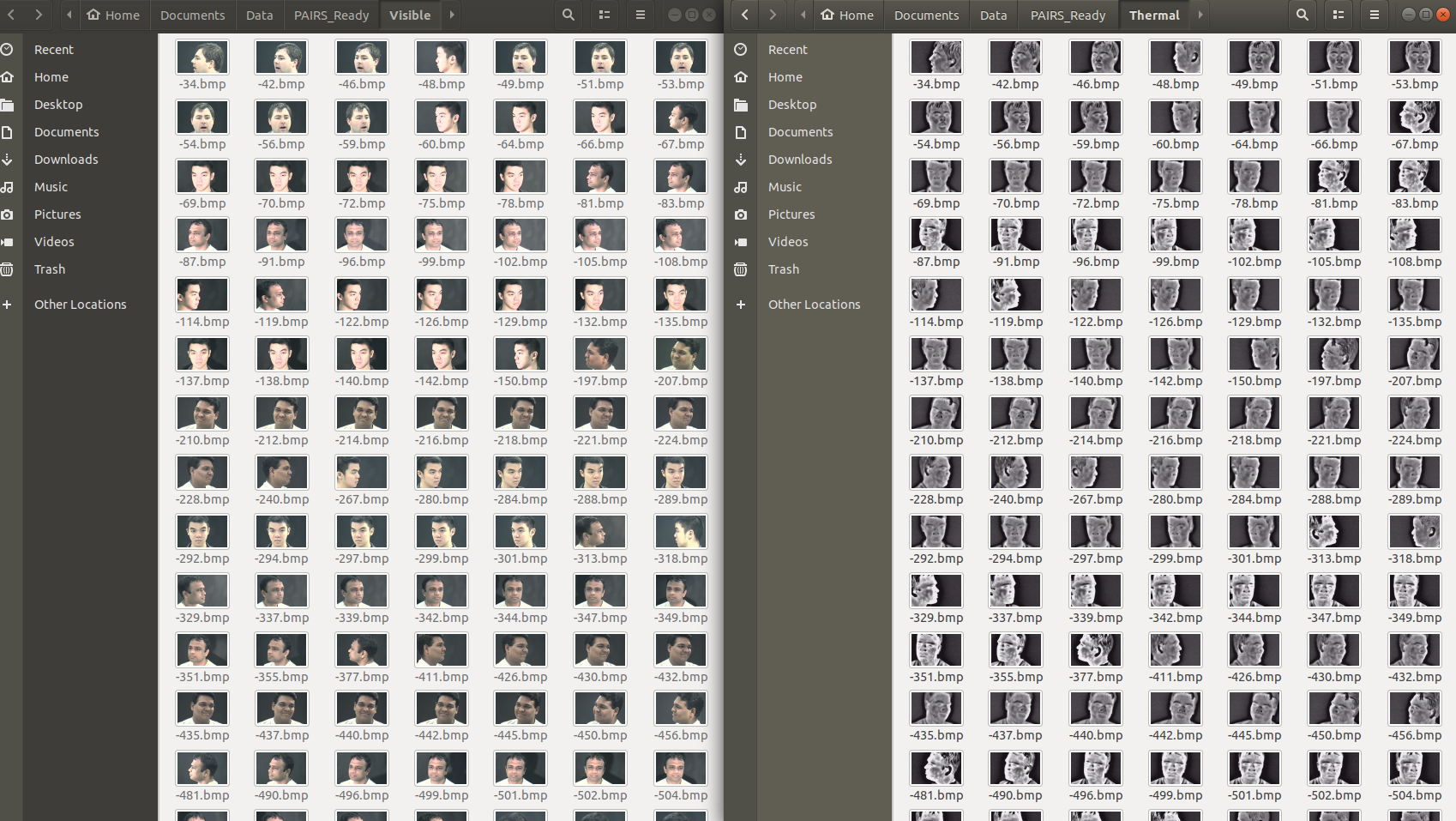

Each of THOSE directories has sub-directories. For my purposes, I actually need to organize the data into an Iris/Thermal and an Iris/Visible directory. Later, I'll probably need to manually separate the non-frontally-aligned images from the frontally-aligned ones. But for the first sweep, I needed to assign unique file names to the approximately 1921 x 2 images. You would think they're already uniquely named. Sadly, no.

That's ok. With some hackiness, I used the rename utility on Linux to prepend arbitrary numbers to the filenames, so that all 1921 files had unique names and each thermal image stayed uniquely paired with its visible counterpart. I used many variations of:

`rename 's/^/10_/' *`, which here means: rename every file in the directory by putting `10_` in front of its file name.
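If the Perl-style `rename` utility isn't available, the same prefixing step can be sketched in Python. This is only a minimal illustration, not the script I actually ran: the function name `add_prefix` is made up for this example, and unlike `rename ... *` it skips sub-directories and refuses to overwrite an existing file.

```python
from pathlib import Path


def add_prefix(directory, prefix):
    """Rename every regular file in `directory` to prefix + original name.

    Hypothetical helper; returns the list of new paths.
    """
    renamed = []
    # sorted() snapshots the listing, so freshly renamed files are not revisited.
    for path in sorted(Path(directory).iterdir()):
        if not path.is_file():
            continue  # leave sub-directories alone
        target = path.with_name(prefix + path.name)
        if target.exists():
            raise FileExistsError(f"{target} already exists")
        path.rename(target)
        renamed.append(target)
    return renamed
```

Calling `add_prefix("some/subject/dir", "10_")` with a different prefix per source directory gives the same effect as the `rename` one-liner above. Running it with the same prefix on the matching thermal and visible folders keeps the pairs aligned by name.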

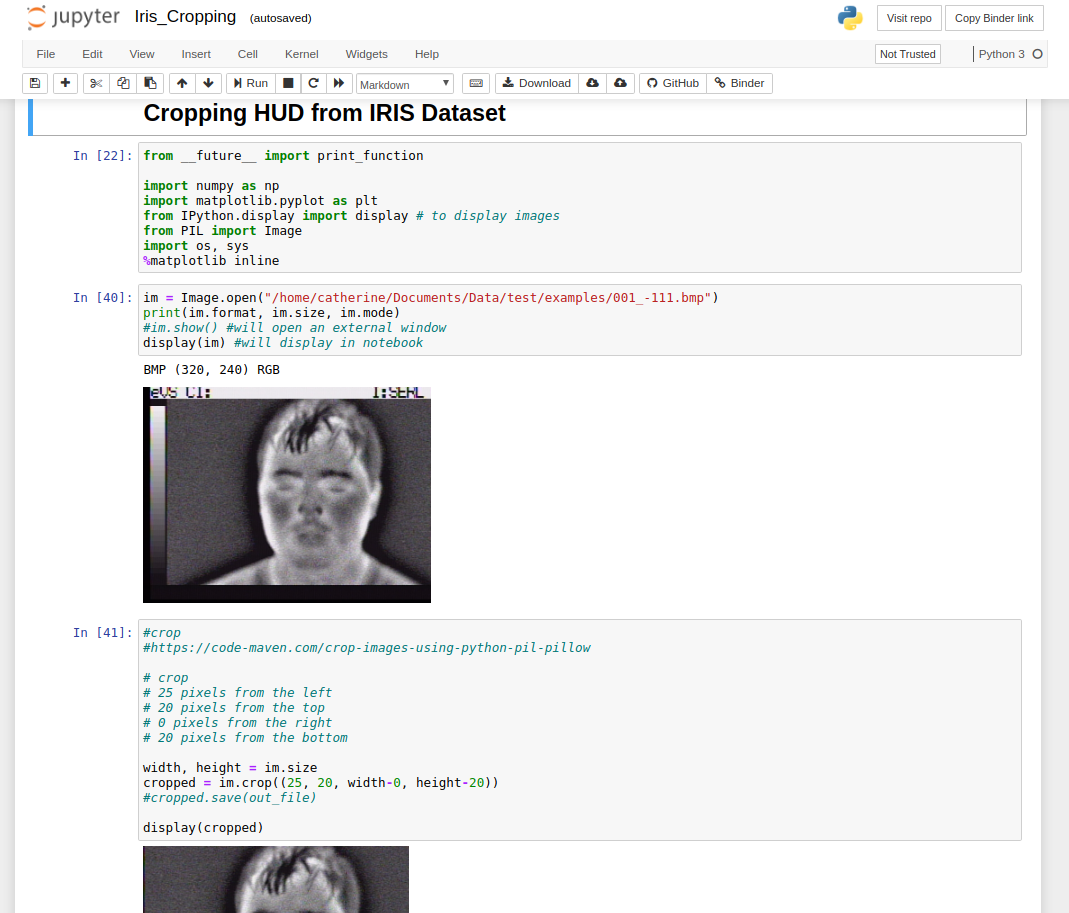

Cropping

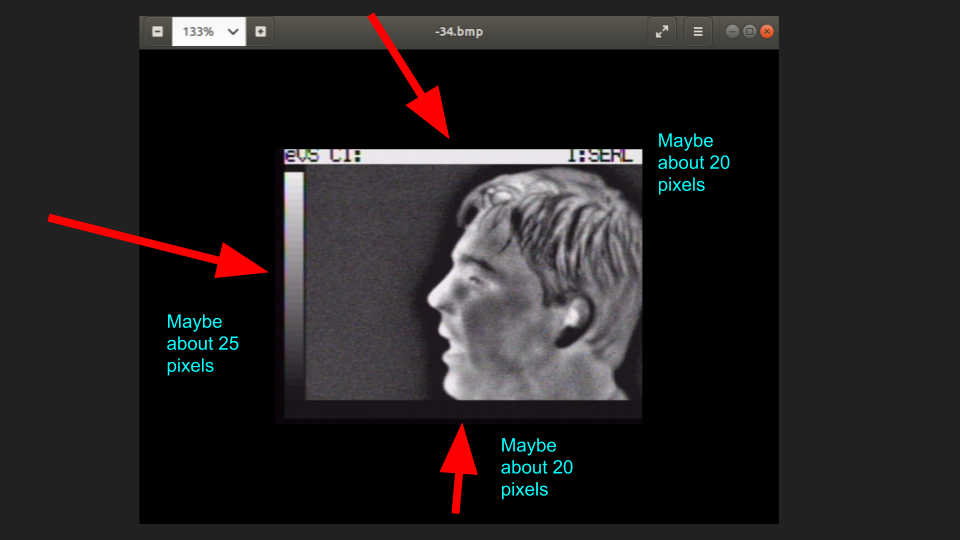

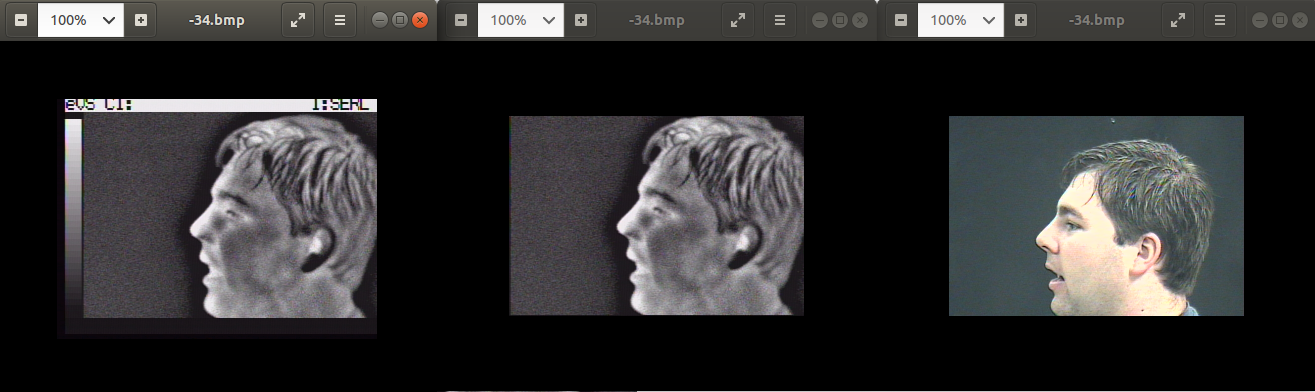

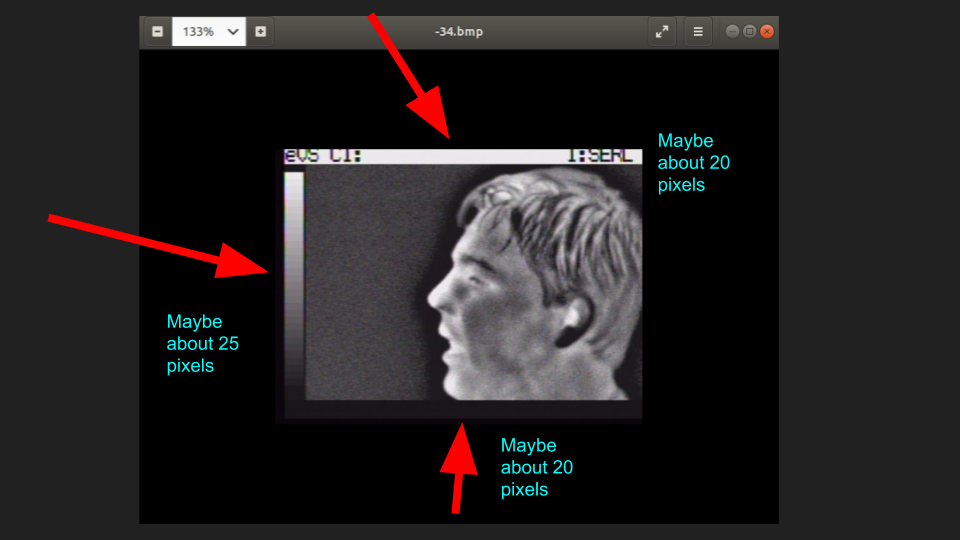

In the picture below, the far-left image is one of the Iris images. Notice what I call the "HUD" (heads-up display) running along the top, left, and bottom edges.

What we need to do is remove these HUD artifacts. Having worked on defense-related CV projects, I can attest that these artifacts interfere with learning.

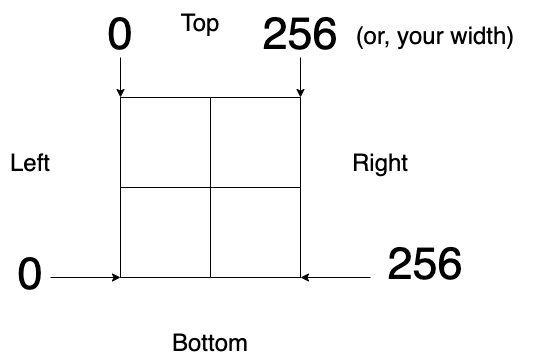

Here's the main idea:

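```python
from PIL import Image

im = Image.open("thermal.bmp")  # placeholder filename; point this at any Iris thermal image

width, height = im.size  # 320, 240

# The crop box is (left, upper, right, lower):
# 25 pixels from the left, 20 pixels from the top,
# 0 pixels from the right, 20 pixels from the bottom
cropped = im.crop((25, 20, width - 0, height - 20))
```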
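To run the same crop over a whole folder rather than one image, something like the following sketch could work. It is not taken from the notebook: the names `hud_crop_box`, `crop_hud`, and `crop_folder` are invented here, and the `*.bmp` pattern is an assumption about the file extension. The default margins are the ones above, measured on a 320 x 240 thermal frame, so check them before applying them to the visible images.

```python
from pathlib import Path


def hud_crop_box(width, height, left=25, top=20, right=0, bottom=20):
    """Return a PIL-style (left, upper, right, lower) box that trims the margins."""
    box = (left, top, width - right, height - bottom)
    if box[0] >= box[2] or box[1] >= box[3]:
        raise ValueError(f"margins leave no pixels in a {width}x{height} image")
    return box


def crop_hud(im, **margins):
    """Crop one image; `im` is anything exposing PIL's .size and .crop()."""
    width, height = im.size
    return im.crop(hud_crop_box(width, height, **margins))


def crop_folder(src_dir, dst_dir, pattern="*.bmp", **margins):
    """Crop every file matching `pattern` in src_dir and save it under dst_dir."""
    from PIL import Image  # imported here so the helpers above work without Pillow

    dst = Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    for path in sorted(Path(src_dir).glob(pattern)):
        with Image.open(path) as im:
            crop_hud(im, **margins).save(dst / path.name)
```

For a 320 x 240 image the default box is `(25, 20, 320, 220)`, so each cropped image comes out at 295 x 200. Writing to a separate output folder, e.g. `crop_folder("Iris/Thermal", "Iris/Thermal_cropped")`, leaves the originals untouched in case the margins need adjusting.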

The interactive notebook is here on Binder:

https://mybinder.org/v2/gh/nudro/gans_study/824115fe72b0ce708ee4fec1cd0c4b86dbe10aa9