Amazon Food Reviews with Keras

I'm teaching myself TensorFlow and Keras. The entire principle of using data flow graphs and deep learning is really fascinating, but it's a learning curve for sure. I've been poking around the MNIST tutorial, and tried my hand at hacking some TensorFlow scripts like this. But after being pressed for time, I decided today to try to run my Amazon text classification project in Keras, which I heard is a little bit more approachable. Also, evidently Keras will be the first high-level library added to core TensorFlow at Google, which will effectively make it TensorFlow’s default API.

"Keras is a high-level neural networks API, written in Python and capable of running on top of either TensorFlow or Theano. It was developed with a focus on enabling fast experimentation. Being able to go from idea to result with the least possible delay is key to doing good research."

So, while I get better up to speed on TensorFlow and as I learn more step by step on the basic theory and math of deep learning, let's kick the tires on Keras. I referenced code and learned from these scripts: fchollet and MLM.

import time

from time import time

import keras

from keras.datasets import reuters

from keras.models import Sequential

from keras.layers import Dense, Dropout, Activation

from keras.layers import LSTM

from keras.preprocessing.text import Tokenizer

import tensorflow as tf

from keras.models import load_model

#Read in data

#read pickle from previous blog post Part I

dir_name = "...data"

data = pd.read_pickle('amz_data.pkl')

#normalize date time

data2 = data.copy()

data2['datetime'] = data2['Time'].map(lambda x: (datetime.datetime.fromtimestamp(int(x)).strftime('%Y-%m-%d %H:%M:%S')))

data2['datetime'] = pd.to_datetime(data2['datetime'])

Now, at this point, I had read enough about TensorFlow that I realized I could do the preprocessing, vectorization of the text using TensorFlow's: learn.preprocessing.VocabularyProcessor() which made life a little easier up front.

#train/test split 80/20

X_data, y_target = data2['text_cln'], data2['Score']

y_target = y_target.values

X_datatrain, X_datatest, y_train, y_test = train_test_split(X_data, y_target, test_size=0.2, random_state=0)

Now, at this point a part of understanding what's going on is what alot of the terminology means. What I did here to set the max document length is just did a quick spot check of how many words are in a few of the Amazon reviews, looking for the max length. So, I did:

data2 frame: data2['text_cln'].iloc[10]

This shows that at index-10, there are 743 words in this comment, I just bumped it up to 800. Again, still learning here, so this could have been one parameter that needs to be better tuned to increase accuracy.

# Process vocabulary

learn = tf.contrib.learn

MAX_DOCUMENT_LENGTH = 800

vocab_processor = learn.preprocessing.VocabularyProcessor(MAX_DOCUMENT_LENGTH)

start_time = time.time() #timing it

x_train = np.array(list(vocab_processor.fit_transform(X_datatrain)))

print("--- %s seconds ---" % (time.time() - start_time))

x_test = np.array(list(vocab_processor.transform(X_datatest)))

print("--- %s seconds ---" % (time.time() - start_time))

n_words = len(vocab_processor.vocabulary_)

print("--- %s seconds ---" % (time.time() - start_time))

print('Total words: %d' % n_words)

print(len(x_train), 'train sequences')

print(len(x_test), 'test sequences')

#confirm that the shape is consistent with max_document_lenght = 800

print('x_train shape:', x_train.shape)

print('x_test shape:', x_test.shape)

Now we've converted our training and test sets into arrays. At this point, we can use Keras.

max_words = MAX_DOCUMENT_LENGTH

batch_size = 32

#An epoch is a full pass over your training data

#this one will pass over the training set 5 times

#In 32 batches

epochs = 5

Specify the target class, in this case the Amazon Review Scores:

#number of target classes

num_classes = np.max(y_train)+1

print(num_classes, 'classes')

print('Convert class vector to binary class matrix '

'(for use with categorical_crossentropy)')

y_train = keras.utils.to_categorical(y_train, num_classes)

y_test = keras.utils.to_categorical(y_test, num_classes)

print('y_train shape:', y_train.shape)

print('y_test shape:', y_test.shape)

Build the model

print('Building model...')

model = Sequential()

model.add(Dense(512, input_shape=(max_words,)))

model.add(Activation('relu'))

model.add(Dropout(0.5))

model.add(Dense(num_classes))

model.add(Activation('softmax'))

This is a pretty simple model with one layer that accepts the inputs (max_words) and applies the 'relu' method for activation, then adds a dropout layer which mitigates overfitting, and then a third layer that applies the softmax regression. See: https://keras.io/getting-started/sequential-model-guide/

Next, the model needs to compiled using an optimizer, specifying the loss function which is what the model will try to minimize, and the metrics, here it's accuracy.

model.compile(loss='categorical_crossentropy',

optimizer='adam',

metrics=['accuracy'])

print("--- %s seconds ---" % (time.time() - start_time))

#timed it at around 8 minutes

history = model.fit(x_train, y_train,

batch_size=batch_size,

epochs=epochs,

verbose=1,

validation_split=0.1)

print("--- %s seconds ---" % (time.time() - start_time))

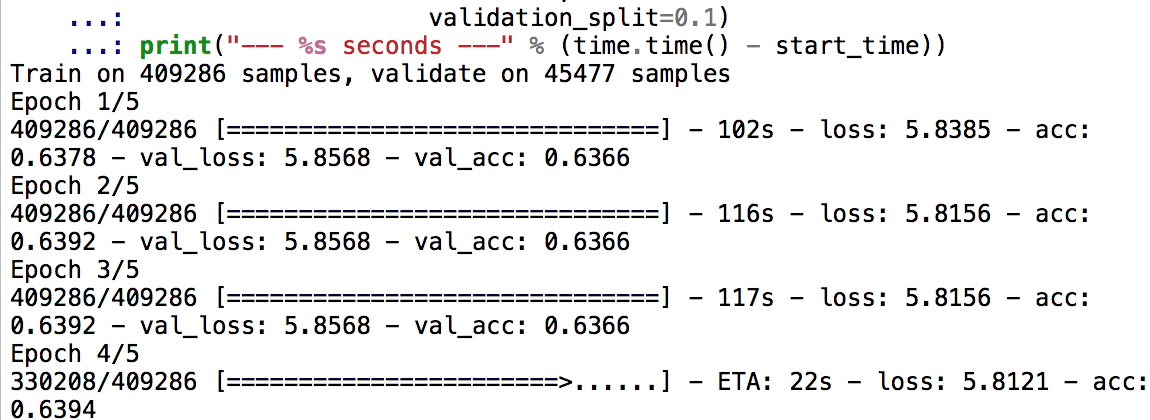

At this point, the model is being fit and run on 5 epochs:

score = model.evaluate(x_test, y_test,

batch_size=batch_size, verbose=1)

print("--- %s seconds ---" % (time.time() - start_time))

print('Test score:', score[0])

print('Test accuracy:', score[1])

Here, we have Test accuracy: 0.638221143275, and can view the predicted classes and their probabilities:

model.predict_classes(x_test, batch_size=batch_size, verbose=1)

model.predict_proba(x_test, batch_size=batch_size, verbose=1)

array([[ 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 1.],

...,

[ 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0., 1.]], dtype=float32)

Well, this isn't a very good accuracy. Probably a result of my first step in learning keras and deep learning. I think as a next step is to add an "Embedding" layer per some previous tutorials I've read regarding text, and spending greater time on the layers. Welcome any thoughts and feedback!